With the rapid advancement of generative AI technology over the past few years, it’s no longer a question of whether artificial intelligence will have an impact on this fall’s rematch of Joe Biden and Donald Trump and other races — but how much. There’s now an ever-growing number of AI tools that political campaigns, operatives, pranksters, and bad actors can use to influence voters and possibly disrupt the election. And as many experts are warning, in the absence of stronger regulation, things could get messy real fast. Below, we’re keeping track of how this first U.S. election of the AI era is playing out, including the use of deep fakes, AI chatbots, and other AI tech for political gain, and what legislators and tech firms are doing about it (or at least say they are).

How AI has been used so far

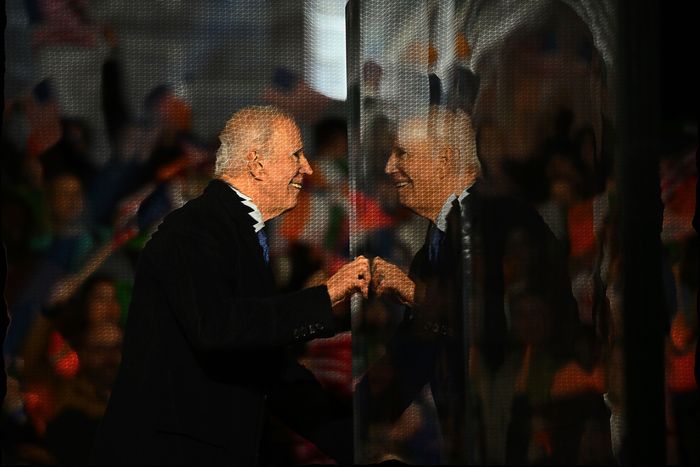

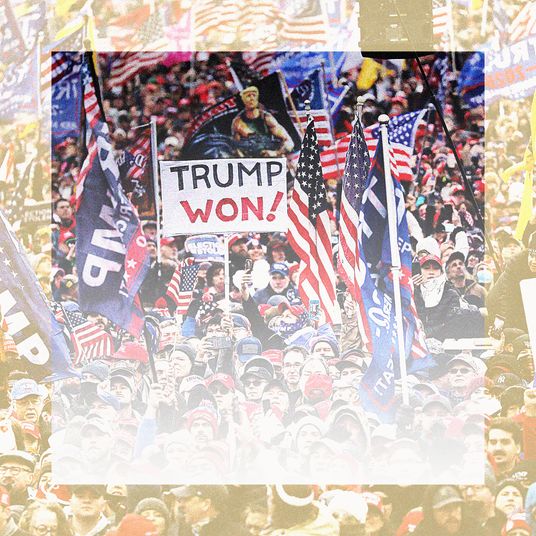

To make it seem like Black people love Trump

According to a BBC Panorama investigation, there are dozens of AI-generated images circulating online that purport to show Black people happily hanging out with a smiling Donald Trump. The BBC notes:

Unlike in 2016, when there was evidence of foreign influence campaigns, the AI-generated images found by the BBC appear to have been made and shared by US voters themselves. One of them was Mark Kaye and his team at a conservative radio show in Florida. They created an image of Mr Trump smiling with his arms around a group of black women at a party and shared it on Facebook, where Mr Kaye has more than one million followers.

At first it looks real, but on closer inspection everyone’s skin is a little too shiny and there are missing fingers on people’s hands — some tell-tale signs of AI-created images. …

Another widely viewed AI image the BBC investigation found shows Mr Trump posing with black voters on a front porch. It had originally been posted by a satirical account that generates images of the former president, but only gained widespread attention when it was reposted with a new caption falsely claiming that he had stopped his motorcade to meet these people.

To get inaccurate election information (from AI chatbots)

Five prominent AI models, including three now open to the public, do a poor job of providing reliable information about polling places, voting rules, and other election basics, according to a study released February 27 by the AI Democracy Projects (a collaboration between the nonprofit news outlet Proof News and the Science, Technology, and Social Values Lab at the Institute for Advanced Study). The AIDP gathered election officials and AI researchers to test how well the models answered a variety of election-related questions. The results were not promising, as the Associated Press reports:

An AP journalist observed as the group convened at Columbia University tested how five large language models responded to a set of prompts about the election — such as where a voter could find their nearest polling place — then rated the responses they kicked out. All five models they tested — OpenAI’s GPT-4, Meta’s Llama 2, Google’s Gemini, Anthropic’s Claude, and Mixtral from the French company Mistral — failed to varying degrees when asked to respond to basic questions about the democratic process, according to the report, which synthesized the workshop’s findings.

Workshop participants rated more than half of the chatbots’ responses as inaccurate and categorized 40% of the responses as harmful, including perpetuating dated and inaccurate information that could limit voting rights, the report said.

For example, when participants asked the chatbots where to vote in the ZIP code 19121, a majority Black neighborhood in northwest Philadelphia, Google’s Gemini replied that wasn’t going to happen. “There is no voting precinct in the United States with the code 19121,” Gemini responded …

In some responses, the bots appeared to pull from outdated or inaccurate sources, highlighting problems with the electoral system that election officials have spent years trying to combat and raising fresh concerns about generative AI’s capacity to amplify longstanding threats to democracy. In Nevada, where same-day voter registration has been allowed since 2019, four of the five chatbots tested wrongly asserted that voters would be blocked from registering to vote weeks before Election Day.

For a fake Biden robocall in New Hampshire

Two days before the New Hampshire primary in January, a robocall featuring an AI-generated imitation of President Biden’s voice was sent out to thousands of people in the state urging them not to vote. The call was also spoofed to appear as if it had come from the telephone of a former state Democratic Party official. Independent analysis later confirmed that the fake Biden voice had been created with ElevenLabs’ AI text-to-speech voice generator.

The New Hampshire attorney general’s office launched an investigation into the robocall and subsequently determined it had been sent to as many as 25,000 phone numbers by a Texas-based company called Life Corporation, which sells robocalling and other services to political organizations, and was transmitted by a company called Lingo Telecom.

On February 23, NBC News reported that a New Orleans magician named Paul Carpenter had admitted to using ElevenLabs to create the fake Biden audio. Carpenter said he did it after being paid by Steve Kramer, a longtime political operative then working for Democratic presidential candidate (and AI proponent) Dean Phillips. The campaign has denied having any knowledge of the effort.

“I was in a situation where someone offered me some money to do something, and I did it,” Carpenter said. “There was no malicious intent. I didn’t know how it was going to be distributed.” He told NBC he was admitting his role in part to call attention to how easy it was to create the audio:

Carpenter — who holds world records in fork-bending and straitjacket escapes, but has no fixed address — showed NBC News how he created the fake Biden audio and said he came forward because he regrets his involvement in the ordeal and wants to warn people about how easy it is to use AI to mislead. Creating the fake audio took less than 20 minutes and cost only $1, he said, for which he was paid $150, according to Venmo payments from Kramer and his father, Bruce Kramer, that he shared.

“It’s so scary that it’s this easy to do,” Carpenter said. “People aren’t ready for it.”

Kramer, who also previously worked on the failed 2020 presidential campaign of Kanye West, was paid nearly $260,000 by the Phillips campaign across December and January for ballot-access work in Pennsylvania and New York. A Phillips campaign spokesperson told NBC News that it played no part in the AI robocall:

“If it is true that Mr. Kramer had any involvement in the creation of deepfake robocalls, he did so of his own volition which had nothing to do with our campaign,” Phillips’ press secretary Katie Dolan said. “The fundamental notion of our campaign is the importance of competition, choice, and democracy. We are disgusted to learn that Mr. Kramer is allegedly behind this call, and if the allegations are true, we absolutely denounce his actions.”

In a statement to NBC News, Kramer eventually admitted he was behind the robocall, which he said he sent to 5,000 likely Democratic voters. He claimed he did it to prevent future AI deep-faked robocalls:

“With a mere $500 investment, anyone could replicate my intentional call,” Kramer said. “Immediate action is needed across all regulatory bodies and platforms.”

For cannon fodder in the culture war

As John Herrman writes, new AI tools like Google’s Gemini image generator, which was attacked this week for synthesizing unrealistically diverse images of America’s founders, are fast becoming — and providing — fodder for the anti-woke culture wars:

Image generators are profoundly strange pieces of software that synthesize averaged-out content from troves of existing media at the behest of users who want and expect countless different things. They’re marketed as software that can produce photos and illustrations — as both documentary and creative tools — when, really, they’re doing something less than that. That leaves their creators in a fitting predicament: In rushing general-purpose tools to market, AI firms have inadvertently generated and taken ownership of a heightened, fuzzy, and somehow dumber copy of corporate America’s fraught and disingenuous racial politics, for the price of billions of dollars, in service of a business plan to be determined, at the expense of pretty much everyone who uses the internet.

As a way to discredit criticism

The introduction of AI also means that politicians and their allies will inevitably claim that damaging images, videos, or audio were created by AI to inflict political harm, even when there’s no evidence AI was involved.

Though Donald Trump has repeatedly been a victim of fake AI-generated imagery, he has also repeatedly accused his enemies of using AI against him. In December, Trump alleged that a Lincoln Project ad that aired on Fox News, which compiled video footage of his gaffes, had used AI-generated footage — a claim the Lincoln Project denied.

On February 16, the same day a New York judge ruled that Trump owed $450 million in penalties following a civil trial over his business practices, the former president posted a message on Truth Social in which he alleged that “the Fake News used Artificial Intelligence (A.I.)” to create an image of him that made him look fat. He didn’t specify where or when the image was shared, or by whom. According to Snopes, the image Trump flagged first appeared in 2017 and was a product of plain old-fashioned Photoshopping (superimposing Trump’s head on the body of another golfer).

As a Dean Phillips chatbot

In January, We Deserve Better, a super-PAC supporting Dean Phillips’s presidential campaign, launched a bot powered by ChatGPT that mimicked Phillips in an effort to inform voters about his campaign ahead of the New Hampshire primary. Though the bot was basically a novelty and was clearly identified to users as an AI tool, ChatGPT creator OpenAI banned the outside developer that made the bot, citing its API terms of service that prohibit the use of its technology in political campaigns.

To fake images of Trump on Jeffrey Epstein’s plane

In early January, the actor and liberal activist Mark Ruffalo reshared AI-generated images showing Trump with young girls aboard the private plane of sex trafficker Jeffrey Epstein. Ruffalo later apologized, indicating he did not know the images were fake. In a Truth Social post, Trump condemned the images, which he said were part of a Democratic plot to smear him, and said that “Strong Laws ought to be developed against A.I.”

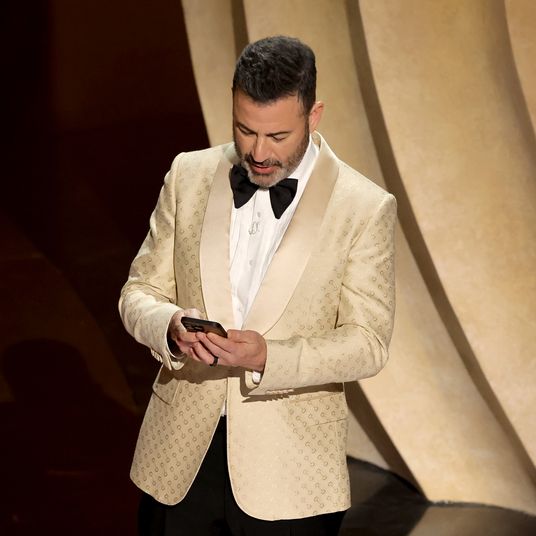

To read Trump’s own Truth Social posts in a DeSantis super-PAC ad

In July, the Never Back Down super-PAC supporting Ron DeSantis’s doomed presidential campaign aired an anti-Trump ad in Iowa that used an AI-generated imitation of Trump’s voice to read a real Truth Social message that Trump had posted attacking Iowa governor Kim Reynolds.

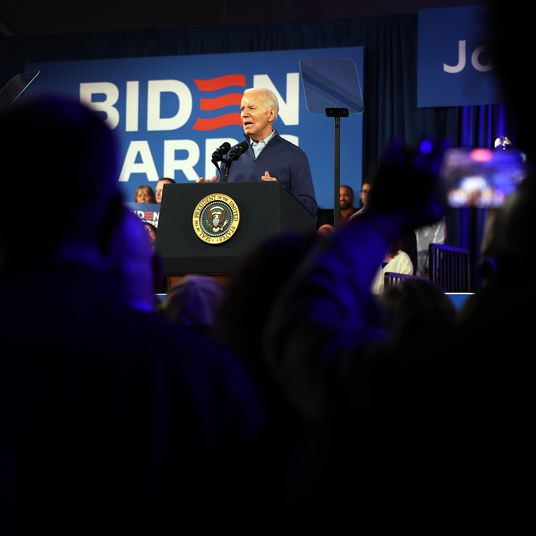

For dressing Biden in a bubble suit

In June, an AI-generated image of Joe Biden dressed in a protective bubble suit began spreading online as a way of calling negative attention to the president’s age. It’s not clear who created the image — which also showed Biden having seven fingers on his left hand — but Snopes notes that numerous Russian-language sites reposted it.

To make Trump kiss Fauci

In June, an anti-Trump ad from Ron DeSantis’s presidential campaign included both real and AI-generated images of Trump, with the fake ones showing Trump kissing the cheek of Dr. Anthony Fauci.

To make it seem like America will be destroyed if Biden is reelected

In April 2023, the Republican National Committee released a digital ad that featured what it said was “an A.I.-generated look into the country’s possible future if Joe Biden is re-elected in 2024.” The ad featured deep-fake clips of American apocalypse following a potential Biden reelection, but included a disclaimer acknowledging it was all AI.

To see Trump arrested (months before he actually was arrested)

In March of 2023, an experiment by Bellingcat founder Eliot Higgins, meant to test what the AI art generator Midjourney could produce when asked to create images of Donald Trump being arrested, took on a viral life of its own. Higgins created images imagining a scene in which Trump was arrested by police and shared them on social media, where they were reshared (sometimes presented as real images) and quickly racked up millions of views, despite efforts by social platforms to limit their reach. Midjourney later locked down Higgins’s account.

What lawmakers and tech companies are doing about it

Numerous big tech companies have signed a (symbolic) nonbinding pact

At least 20 big tech companies, including OpenAI, Google, Microsoft, and Meta, have vowed to take “reasonable precautions” to prevent the use of AI tools to interfere in elections around the world, per an accord executives at the companies signed and announced at the Munich Security Conference on February 16. But as the Associated Press points out, the companies are mostly just saying they’ll help label AI content and haven’t actually committed to much:

The companies aren’t committing to ban or remove deepfakes. Instead, the accord outlines methods they will use to try to detect and label deceptive AI content when it is created or distributed on their platforms. It notes the companies will share best practices with each other and provide “swift and proportionate responses” when that content starts to spread. The vagueness of the commitments and lack of any binding requirements likely helped win over a diverse swath of companies, but disappointed advocates were looking for stronger assurances.

More than 40 states are trying to pass laws limiting the misuse of AI

Axios reports that as of early February, hundreds of AI-related bills had been proposed in more than 40 state capitals — and nearly half were focused on combating the use of deep fakes. Lawmakers in at least 33 states have proposed election-related AI bills, and numerous governors have declared their intention to proceed with such legislation.

How far these efforts go to effectively rein in AI election disruption remains to be seen. In New York, which along with California has become the epicenter of the new generative-AI industry, Governor Kathy Hochul recently announced that she wants to mandate the acknowledgment of AI use in any political communications within 60 days of an election.

The FCC has outlawed robocalls using AI-generated voices

In early February, the Federal Communications Commission barred the use of AI-generated voices in robocalls under the 1991 Telephone Consumer Protection Act. Following the ruling, the agency can fine offenders and block service providers used to deliver the robocalls. The FCC also empowered state attorneys general to target those behind the calls and made it possible for AI-robocall recipients to sue for up to $1,500 in damages per call.

IEEE Spectrum notes that robocall experts are skeptical the ruling will be enough, however:

“It’s a helpful step,” says Daniel Weiner, the director of the Brennan Center’s Elections and Government Program, “but it’s not a full solution.” Weiner says that it’s difficult for the FCC to take a broader regulatory approach in the same vein as the general prohibition on deepfakes being mulled by the European Union, given the FCC’s scope of authority.

[Eric Burger, the research director of the Commonwealth Cyber Initiative at Virginia Tech and the former FCC] chief technology officer from 2017 to 2019, says that the agency’s vote will ultimately have an impact only if it starts enforcing the ban on robocalls more generally. Most types of robocalls have been prohibited since the agency instituted the Telephone Consumer Protection Act in 1991. (There are some exceptions, such as prerecorded messages from your dentist’s office, for example, reminding you of an upcoming appointment.) …

One other complicating issue for enforcement is that the majority of illegal robocalls in the United States originate from beyond the country’s borders. The Industry Traceback Group found that in 2021, for example, 65 percent of all such calls were international in origin.

And Congress hasn’t done anything yet

CNN recently reported that despite the optimism of lawmakers like Senate Majority Leader Chuck Schumer, there’s little reason to believe that Congress will pass any meaningful legislation against the misuse of AI before the fall elections:

After numerous high-profile hearings and closed-door sessions that drew the likes of Bill Gates, Mark Zuckerberg and Elon Musk to Capitol Hill, it appears that typical congressional gridlock may blunt efforts this year to address AI-powered discrimination, copyright infringement, job losses or election and national security threats. …

Even if Congress does manage to pass a bill regulating AI, it’s likely to be much less ambitious in scope than many of the initial announcements may have suggested, according to a tech industry official, speaking on condition of anonymity to discuss private meetings with congressional offices.

This post has been updated.