Artificial intelligence is advancing at a superhuman pace. Since the release of ChatGPT last November, I have learned how to stop overcooking salmon. Over that same time period, OpenAI’s text-bot learned how to instantly adapt a drawing into a working website, build a Pong-like video game in under 60 seconds, ace the bar exam, and generate recipes based on photographs of the food left in your fridge.

The frenetic pace of AI’s progress can be disorienting. You may be confused about the differences between the various AI models or anxious about their implications for your future employability. You might even be tempted to direct questions about these matters to a chatbot instead of Googling them the old-fashioned way. But why pose your exact queries to a superintelligent machine when you could instead read answers to somewhat similar questions by a moderately intelligent human?

Here is everything you need to know about the rapidly accelerating AI revolution.

What is GPT-4?

GPT-4 is to autocomplete as you are to a rhesus monkey. Which is to say, OpenAI’s latest generative pre-trained transformer (or GPT) is a highly evolved version of a fairly simple creature. Like the autocomplete function that’s been finishing your text messages and Google searches for years, GPT-4 is a machine that probabilistically determines which word is most likely to follow from the words that it’s presented with.

But unlike the (often wrong) text-message coach on your smartphone, GPT-4 has been trained on more than 1,000 terabytes of data. By analyzing an entire internet’s worth of text, the robot has discerned intricate probabilistic rules governing which words and images go together. These rules are not all known to its creators; large language models are a bit of a “black box.” We don’t know precisely how they know what they know. But they know a bit more every day.
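
To make the autocomplete comparison concrete, here is a minimal sketch of next-word prediction in Python. It is a toy bigram counter, not how GPT-4 is actually built: the tiny corpus and the function names (`train_bigram_model`, `next_word_probabilities`, `autocomplete`) are made up for illustration. It shows only the basic idea of picking the word most likely to follow the one in front of it.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for every word in the text, which words follow it and how often."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        followers[current_word][next_word] += 1
    return followers


def next_word_probabilities(model, word):
    """Turn the raw follower counts for `word` into probabilities."""
    counts = model.get(word.lower())
    if not counts:
        return {}  # the word never appeared with a follower in the training text
    total = sum(counts.values())
    return {candidate: count / total for candidate, count in counts.items()}


def autocomplete(model, word):
    """Return the single most likely next word, or None if the word is unseen."""
    probabilities = next_word_probabilities(model, word)
    if not probabilities:
        return None
    return max(probabilities, key=probabilities.get)


# Hypothetical training text, chosen only to keep the example small.
corpus = "the cat sat on the mat and the cat ate the fish"
model = train_bigram_model(corpus)

print(next_word_probabilities(model, "the"))
print(autocomplete(model, "the"))
```

In this toy corpus, "the" is followed by "cat" twice and by "mat" and "fish" once each, so the sketch guesses "cat." A large language model conditions on far more than one preceding word and learns its probabilities rather than counting them, but the job description is the same.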

What’s the difference between GPT-4 and ChatGPT?

There are two big differences. One is that ChatGPT is an application, while GPT-4 is a general technology. If the former is a piece of software, the latter is a hard drive. GPT-4 can power a chatbot. But it can also be applied to myriad other purposes. Start-ups are using GPT-4 to, among other things, instantly draft lawsuits against tele-scammers, teach foreign languages, and describe the world for blind people. Meanwhile, computer programmers are using the model to automate the more menial and time-intensive parts of their jobs, enabling them to churn out simple apps and video games in an hour or less.

Separately, the version of ChatGPT that’s open to the public runs on GPT-3.5, an earlier and less powerful version of the technology. When GPT-3.5 took the bar exam, its score put it in the bottom 10 percent of all test-takers. When GPT-4 did the same, it outperformed 90 percent of human dweebs. GPT-4 speaks Swahili better than GPT-3.5 speaks English. GPT-3.5 can process six-page-long requests; GPT-4 can process 50-page ones. GPT-4 can generate text in response to images; GPT-3.5 can’t.

If AI is improving this rapidly, is anyone’s job safe?

In the near term, AI won’t fully replace humans in any profession. But it will likely increase productivity — and thus plausibly reduce the need for human workers — in a wide variety of fields. What once took a team of coders might now only require two. A small law firm that currently pays several paralegals to synthesize vast troves of information into a legal brief might be able to get by with AI and one human supervisor. And the same may be true of companies in the fields of market research, customer service, finance, accounting, and graphic design. Even medicine might not be immune; it’s plausible that we will soon have AIs that read X-rays better than human radiologists.

Did you forget journalism?

Well, journalists’ jobs have never been safe!

They’re less safe now, though, right?

Yeah. GPT-4 can obviously “research and write” exponentially faster than a human journalist, and on a wider range of topics. And it is less prone to errors than GPT-3.5. Some outlets are already publishing AI-generated content.

Still, we writers should be able to keep ourselves relevant by getting creative. Really, we just need to lean into the most inextricably human parts of our craft. For example, if you’re assigned a Q&A-style explainer on GPT-4, you might want to let it devolve into a conversation with yourself about AI-related insecurities and anxieties.

So, the key advantage that human writers have over AI is that they can be more self-indulgent?

That’s a loaded way of putting it. Is Charlie Kaufman “self-indulgent”?

Yes.

Anyway, the point is that GPT-4 might be able to outperform journalists on some dry, generic assignments. But its lack of self-consciousness and reflexivity prevents it from excelling at more creative projects. GPT-4 can generate language. But it can’t truly think. It can only regurgitate text it finds on the internet in novel combinations. Basically, it offers a facsimile of expertise that’s belied by the glibness of its analysis and occasional errors.

Isn’t that what you do?

Ha.

But really. Isn’t “regurgitating text you find on the internet in novel combinations” the core competency of an “explanatory” journalist?

There are obvious distinctions between what I do and what a large language model does.

Right. GPT-4 can synthesize insights from 1,000 terabytes of information. The top of this post synthesizes facts from a handful of articles you read.

I also listened to some podcasts. Anyway, my writing does a bit more than that, because I can actually think. The human mind is no mere machine for probabilistically determining the next word in a—

… sentence?

We’re getting off track. For now, the whole debate about whether AIs can be truly sentient is a distraction. Even if this technology isn’t (and never will be) anything more than a “stochastic parrot,” it could still fundamentally transform our lives. GPT-4 is already intelligent enough to replace a wide variety of workers who previously thought themselves invulnerable to automation. AI chatbots and voice imitators are already realistic enough to convince impressionable old folks that they’re speaking with their kidnapped grandkids. AI-generated “deep-fake” videos are already being used in Chinese state propaganda. And these models are getting better at breakneck speed. Investment is pouring into AI, and firms the world over are racing with each other for shares of a vast pool of capital.

These machines might never be capable of genuine reasoning. But for many practical purposes, that won’t matter. “Autocomplete on acid” certainly looks sufficient to rapidly remake white-collar employment and render actual videos largely indistinguishable from fake ones. Given the already precarious state of America’s social fabric and political economy, that’s alarming. And that’s before we get into the really “out there,” medium-to-long-term scenarios.

Like the robot apocalypse?

That’s one of them. Last year, a survey asked a group of AI experts what probability they would place on AI systems “causing human extinction or similarly permanent and severe disempowerment of the human species.” The median answer was 10 percent. Which might say more about the neuroses or self-importance of AI researchers than it does about the actual threat. At least, that’s what I would have told you a few months ago. Now I’m not so sure. After all, this thing made Pong in 60 seconds.

Again, AIs don’t need to become “conscious” in the way that humans are to wreak catastrophic harm. Remember, these models are a black box. We don’t know how they fulfill our requests.

Imagine that some corporation’s HR department acquires an AI 20 times as powerful as GPT-4, and asks it to help the firm lower its health-care costs. Maybe that AI (1) examines the data and discerns that a key determinant of those costs is the health of a firm’s workforce, (2) locates the medical records of all current employees and identifies the most prolific consumers of medical care, (3) hacks into their computers and fills them with stolen documents, and then (4) rats them out to the FBI.

You’re worried AI is going to frame you for corporate espionage if you don’t take up jogging?

The point is there are lots of ways that a powerful AI with inscrutable methods could have damaging impacts, even if it is totally committed to serving our ends. And those damaging impacts could theoretically be cataclysmic. Ask an AI to help you minimize global warming, and maybe it hacks into the world’s atomic arsenals to unleash nuclear winter.

This sounds like “The Monkey’s Paw” or a Twilight Zone plot.

I know. And the utopian talk about the impending “singularity” sounds like a cyberpunk version of millenarian Christianity. Until recently, I was pretty comfortable writing all this off as Gnosticism for atheistic computer nerds. I still feel sheepish about buying into the hype around self-driving cars. And yet, if you had asked me a year ago how long it would take humanity to develop an AI that could turn hand-drawn sketches into functioning websites, and single-sentence prompts into halfway decent parody songs, I would have said something in between “not for a while” and “never.”

That may say more about your ignorance of this subject than it does about the past year of AI progress.

Yeah, maybe. It’s totally possible that this exponential growth in AI’s capabilities will prove fleeting. It may be that there’s a hard ceiling on what this form of intelligence can achieve. According to one 2022 paper, AI models have been growing their training-data sets by roughly 50 percent a year, while the total stock of high-quality language data is growing just 7 percent annually. By 2026, these AIs might effectively finish “reading” every digitized book and article in existence, and the growth in their capacities could therefore slow or stagnate. Although maybe, by that point, the models will be able to generate their own training data.
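
The arithmetic behind that kind of projection can be sketched in a few lines of Python. The 50 percent and 7 percent growth rates come from the figures above; the starting ratio between the total stock of text and the size of today’s training sets is a made-up number for illustration, not a figure from the paper, and the function name `years_until_data_runs_out` is likewise hypothetical.

```python
import math


def years_until_data_runs_out(stock_to_dataset_ratio,
                              dataset_growth=0.50,
                              stock_growth=0.07):
    """Years until a training set growing at `dataset_growth` per year
    catches up with a stock of text growing at `stock_growth` per year.

    Solves ratio * (1 + stock_growth)**t == (1 + dataset_growth)**t for t.
    """
    if stock_to_dataset_ratio <= 1:
        return 0.0  # the training set already covers the whole stock
    if dataset_growth <= stock_growth:
        return math.inf  # the training set never catches up
    relative_growth = (1 + dataset_growth) / (1 + stock_growth)
    return math.log(stock_to_dataset_ratio) / math.log(relative_growth)


# Assumption for illustration only: the stock of high-quality text is
# four times larger than the current training sets.
print(round(years_until_data_runs_out(4.0), 1))
```

Under that assumed fourfold head start, the models catch up in a bit over four years. Doubling the head start to eightfold only buys about two more years, which is why the exact starting ratio matters less than the gap between the two growth rates.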

You’re saying “might” and “maybe” a lot.

Well, I’m feeling uncertain. In fact, I think radical uncertainty is one of the defining characteristics of this moment in history. It’s never been harder for me to picture my own future — even five years out — and feel confidence in what I see. And not only because of AI. Maybe it started with COVID. We all pretend that we’re protagonists in the drama of our lives, and imagine that our life’s outcomes are downstream of our actions. If we indulge our tragic flaws, then we suffer.

If we fuck around, then we find out.

Or else, we cultivate our virtues and then we thrive. But after COVID, it’s hard to unlearn how thoroughly your life’s little narrative can be upended by offstage events. A bat bites a raccoon dog and then you lose a year to quarantine while millions lose their lives.

That raccoon-dog thing doesn’t actually disprove the lab-leak theory—

Let’s not get into it. Anyway, obviously the future has always been uncertain. But it’s harder to ignore that fact today than it was before Donald Trump became president, or COVID went viral.

Or planes flew into the World Trade Center?

Yeah. I admit, some of this feeling might be a peculiarly millennial thing. It’s not like the future was super-certain for my grandparents in 1935. But those of us who were born into the American middle class at “the end of history” were raised on the promise of stasis. All the epochal wars and ideological struggles belonged to the netherworld that preceded your birth, the one conjured by Polaroids of your parents looking stoned in bell-bottoms. In our world, presidents crusaded for school uniforms and equivocated about blowjobs. Technology advanced — the N64 blew away Super Nintendo — but it was still just a linear improvement on what already was. And that was how everything was going to be, assuming we survived Y2K.

“Sure, Grandpa, let’s get you to bed.”

But this isn’t just about being a “’90s kid.” We’re living in unprecedented times. We’ve never had a planet with this many people, or a democracy with media this decentralized and gateless, or an industrial civilization with a climate this warm, or a global population this old, or an American empire with a Chinese rival. Add in superintelligent text-bots that improve at an exponential rate, and it’s hard not to feel a perpetual sense of disorientation.

The far less remarkable innovation of social media proved capable of remaking our societies and selves in ways we could have scarcely anticipated. The way I process information and relate to my social sphere is utterly different than it was 11 years ago, before I created a Twitter account. If I’d tried at that time to imagine what my daily life would be like in the year 2023, I would have been wrong about the fundamentals, not the details. The experience of daily immersion in an endless stream of witty banter, self-righteous sadism, academic squabbles, memes, and thirst traps would have been impossible for my younger self to conceive of. So, how could I possibly know what my life will be like in, say, 2028, given AI’s current trajectory?

One minute, you’re reading blogs; the next decade, you’re reading microblogs. Transformative stuff.

It was, actually. But yes, the transformative potential of AI is exponentially greater. The AI alarmists aren’t just worried about “misalignment” leading to the apocalypse. They’re also worried about AI bringing about an economic utopia too soon.

Their concern goes like this: If AIs get to the point where they can automate all (or even most) of the basic processes and activities that humans use to advance scientific research, then the pace of technological innovation could abruptly accelerate. And that in turn could set off a positive feedback loop, in which that leap in innovation generates more powerful AIs, which generate further leaps in innovation, such that, over the course of a year or two, humanity makes a technological leap analogous to the one we made over the millennia between the Bronze Age and today.

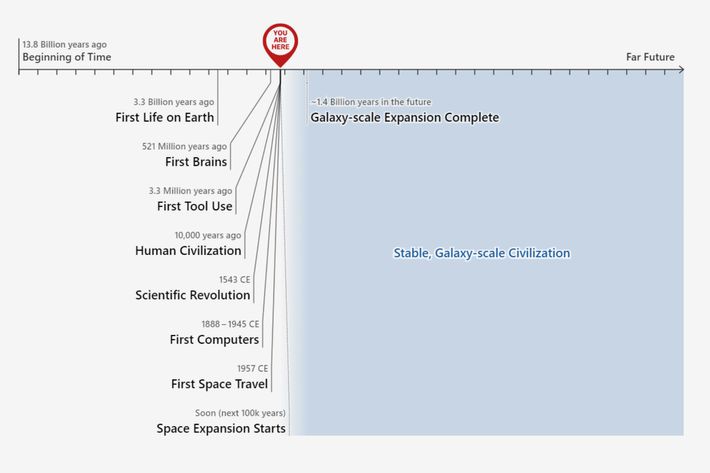

Holden Karnofsky, one of the Effective Altruism movement’s leading AI doomsayers, argues that such an exponential acceleration in humanity’s technological development seems less far-fetched when you examine our current pace of technological change against the backdrop of geological time. Viewed from this perspective, we are already living through a mind-bogglingly rapid surge in economic progress.

If Karnofsky’s prophesied “economic singularity” comes to pass, it would be socially destabilizing and potentially cataclysmic. We aren’t very far from developing biotech tools that make it cheap and easy for a sociopath of middling intelligence to engineer a supervirus in their kitchen. There’s no telling what spectacular acts of nihilistic bloodlust we could enable by compressing a few centuries’ worth of technological developments into a few years.

What does it even mean to “automate all the basic processes and activities that humans use to advance scientific research”? Are the robots going to conduct FDA trials on other robots in the metaverse? Are large language models going to somehow instantly erect factories? It’s cool this thing can kind of code, but aren’t we getting a little ahead of ourselves?

Probably? But if the EAs’ hyperventilating helps catalyze a push to better regulate these tools, then it will be to the good.

In any case, it’s an interesting idea to sit with. In many ways, the past four decades of American life have been defined by the discontents of low growth. It was the slowdown in postwar rates of economic expansion and productivity that unraveled the New Deal bargain and cleared the path for today’s inegalitarian economic order. And the presumption of scarcity undergirds all current economic debates. To curb inflation, some will have to become poorer. To “save” Medicare and Social Security, seniors will need to wait longer to retire, or else the rich will need to pay higher taxes. Meanwhile, many in the economics profession take it as a given that “advanced” economies with aging populations cannot hope to grow at their mid-20th-century rates.

If even a pale imitation of the “economic singularity” comes to pass — if AI does not propel us to “100 percent” annual GDP growth, but up to, say, 10 percent — then many of our polity’s fundamental conflicts will be transformed. And many of the concerns that I’ve spent my professional life dwelling on may be moot.

Well, that shouldn’t be too big a deal since, at that point, your professional life will be over anyway.

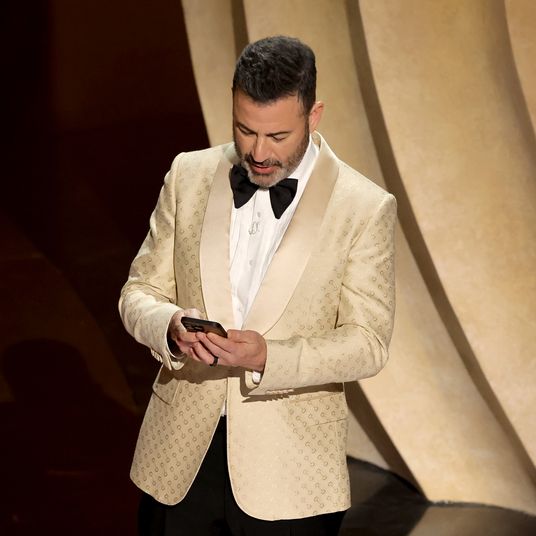

I still cling to the hope that AI will never be able to write a piece this meandering or irritatingly self-deprecating. But when I suggested that I am too creative to be replaced earlier, I was just putting on a brave face. A couple days ago, my boss posted this ChatGPT query into Slack:

Accessibly explaining Fed policy is among the core duties of my gig. Now, an outdated AI can do that in a fraction of the time that I require.

I look at that answer and think, “What if we’re on the cusp of a revenge against the nerds?” Wherein, after decades of technological change that increased the relative value of intellectual over manual skills, that process kicks into reverse? It sure looks like it’s much easier to automate virtually every “laptop job” than it is to build a robot plumber. So, what if this felicity with language I’ve been cultivating since grade school — and which let society forgive my utter lack of practical skills — suddenly loses all its economic value and social esteem? What if I am forced to learn that the habits of mind I’d taken to be singular manifestations of my inimitable self are actually just the outputs of an obsolete (and not-so-large) language model?

I don’t know. Maybe fight for a future in which a person’s social worth and material comfort aren’t contingent on their value in the labor market? Or, ya know, ask ChatGPT.